Computers Really Are Taking Our Jobs

The last great recession began in December 2007 and officially ended in June 2009. But it doesn’t feel like it’s over. Even though productivity and in many cases corporate profits have rebounded, unemployment and underemployment remain high. We have seen this pattern after each recession since the 1990s and it has been dubbed “jobless recovery.”

There are many arguments about why unemployment remains so stubbornly high. Some explain that because the economy is weak, employers are afraid to grow and reluctant to hire. Others have argued that the economy is facing stagnation and a failure of innovation, especially relative to the booming economies of Asia. For educators, the “skills gap” explanation has been the most pertinent. This posits that employers now require different, generally higher skill levels from their workers and that education is not providing what is needed. Consequently, too many people are simply unprepared for work in the 21st century.

A new book, Race Against the Machine, by MIT Sloan School of Management researchers Erik Brynjolfsson and Andrew McAfee, puts an additional explanation in very clear and accessible terms. It’s not simply that we’re afraid to grow, or stagnating, or even unskilled. Instead, they argue, we’re out of work because, as we have feared for some time, computers really are taking our jobs, and they’re getting better at it every year. Computers are faster and cheaper than people at repetitive tasks like filing documents and building things on an assembly line. And as they get more powerful, they can take on more sophisticated repetitive tasks like reading X-rays, analyzing legal documents, and geocoding address data. Equally important, if not more so, computerization allows tasks to be reorganized in a way that eliminates the need for many jobs. Internet shoppers don’t need store clerks; Google searchers don’t need librarians; and Facebook friends don’t need letter carriers.

This technological revolution is possibly more dramatic than the Industrial Revolution that transformed world economies and generated a period of mass dislocation and loss of work in the 18th and 19th centuries.

“Technological progress—in particular improvements in computer hardware, software, and networks—has been so rapid and so surprising that many present-day organizations, institutions, policies, and mindsets are not keeping up…Computers are now doing many things that used to be the domain of people only. The pace and scale of this encroachment into human skills is relatively recent and has profound economic implications. Perhaps the most important of these is that while digital progress grows the overall economic pie, it can do so while leaving some people, or even a lot of them, worse off.”

Brynjolfsson and McAfee speculate that we are now entering a time of rapid, even astounding, growth in computing power and the potential for new technology. The Google car that drives itself and Watson, the Jeopardy-champion supercomputer, are just the leading edge of a wave of super technology emerging as the exponential growth of computing power begins to take off.

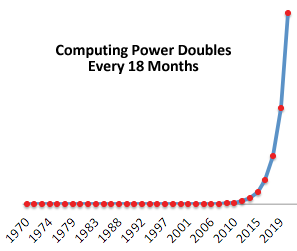

Two concepts, they argue, are key to understanding how computing power is beginning to explode. The first is Moore’s Law, the codification of the observation, first noted by Gordon Moore of Intel, that computing power grows exponentially. It’s generally accepted now that it’s doubling every 18 months.

After recognizing that computing power grows in this way, the next step is to understand how dramatic this kind of growth ultimately becomes. In the first few iterations, this exponential growth appears comprehensible and manageable, as it does in the little green bar graph above, but continuing at this same pace, growth soon becomes incomprehensibly rapid. The graph on the right shows what computing power looks like when doubling every 18 months from 1970 to 2020. All of the growth in the first 30 years is so completely dwarfed by the rapidly escalating pace of growth from 2010 to 2020 that it is invisible.
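The arithmetic behind this claim can be checked with a short sketch. It assumes a steady doubling every 18 months starting from a baseline of 1 in 1970, as described above; the function name `relative_power` is only illustrative, and real hardware has not followed the curve this neatly.

```python
# Assumption: computing power doubles every 18 months (1.5 years),
# the figure quoted in the text, starting from a baseline of 1 in 1970.
DOUBLING_PERIOD_YEARS = 1.5


def relative_power(year, base_year=1970, doubling_years=DOUBLING_PERIOD_YEARS):
    """Return computing power relative to base_year under steady doubling."""
    return 2 ** ((year - base_year) / doubling_years)


final = relative_power(2020)
for year in range(1970, 2021, 10):
    power = relative_power(year)
    # Share of the 2020 level already reached by this year.
    share = power / final
    print(f"{year}: {power:.3g}x the 1970 level, {share:.4%} of the 2020 level")
```

Under these assumptions, the level reached by 2000 is around a hundredth of one percent of the 2020 level, and even the 2010 level is only about one percent of it, which is why the early decades vanish on a linear chart.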

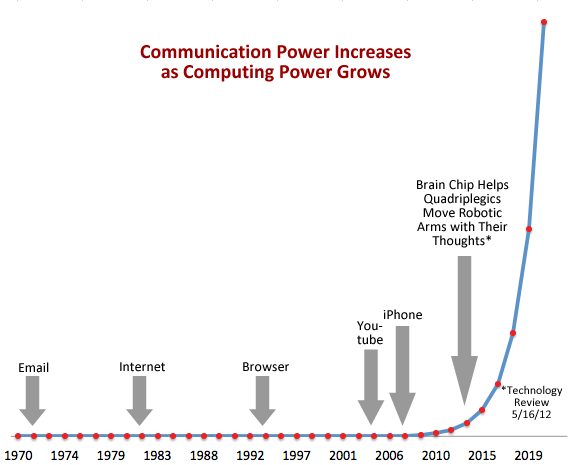

It’s difficult to understand what this kind of growth in capacity means without tying it to actual technological change. So, on the graph below, I have labeled some key points in the recent history of communications. Email first began in the scientific and academic community in the early 1970s. The internet was born in the early 1980s and opened for commercial use within a decade. The first easy-to-use web browser, Mosaic, was introduced in 1993. Within 20 years, each of these technologies became ubiquitous. They have transformed the work of communication and led to complete reorganization and tremendous loss of jobs in fields like printing, publishing, telecommunications, and the U.S. Postal Service.

As computing technology continues to become ever more powerful we have seen innovations like YouTube, and streaming video generally, force the reinvention of broadcasting, and the iPhone, a full-powered computer in your pocket, begin to revolutionize more industries than I can list here. But the pace of growth doesn’t stop. Last month MIT’s Technology Review published an article titled “Brain Chip Helps Quadriplegics Move Robotic Arms with Their Thoughts” showing that implanted computer chips can now actually help people to perform complex real-world tasks. In other words, people can now communicate with computers by their thoughts alone. As far-fetched as this kind of communication seems to us today, it is no more preposterous than the first email or the first web page. These first iThought experiments have been done with quadriplegics eager for the freedoms this technology will bring. But over the next 20 years, as computing power continues to grow and costs to fall, this capacity could become available to everyone.

As well as becoming more powerful, computers are also becoming exponentially more energy efficient. According to a recent Technology Review article on this topic,

“If a modern-day MacBook Air operated at the energy efficiency of computers from 1991, its fully charged battery would last all of 2.5 seconds. Similarly, the world’s fastest supercomputer, Japan’s 10.5-petaflop Fujitsu K, currently draws an impressive 12.7 megawatts. That is enough to power a middle-sized town. But in theory, a machine equaling the K’s calculating prowess would, inside of two decades, consume only as much electricity as a toaster oven. Today’s laptops, in turn, will be matched by devices drawing only infinitesimal power.”

Source: Technology Review, “The Computing Trend That Will Change Everything,” 4/12/2012
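As a rough consistency check on the quoted projection, the sketch below works out how fast energy efficiency would have to double for a 12.7-megawatt machine to shrink to toaster-oven power within two decades. The 1,200-watt toaster oven figure is my own assumption, not a number from the article, and the variable names are illustrative.

```python
import math

SUPERCOMPUTER_WATTS = 12.7e6   # the K computer's draw, from the quote above
TOASTER_OVEN_WATTS = 1200.0    # assumption: a typical toaster oven, not from the article
YEARS = 20                     # "inside of two decades"

# Factor by which power draw must fall for the same amount of computation.
efficiency_gain = SUPERCOMPUTER_WATTS / TOASTER_OVEN_WATTS

# Number of efficiency doublings needed, and the implied doubling period.
doublings = math.log2(efficiency_gain)
doubling_period_years = YEARS / doublings

print(f"Efficiency gain needed: about {efficiency_gain:,.0f}x")
print(f"Doublings needed: {doublings:.1f}")
print(f"Implied doubling period: {doubling_period_years:.2f} years")
```

With the assumed wattage, this comes to a little over 13 doublings, or one roughly every year and a half, so the projection is in line with the 18-month doubling pace discussed earlier.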

Implanted computers powered by the energy of our own bodies are thus not inconceivable, and if this revolutionary device is not created, others that we cannot currently imagine surely will be. Creations like this, both imaginable and as yet unimaginable, will drive change in the world of work through 2020. In my next post I will discuss Brynjolfsson and McAfee’s speculations about just how work will be changing over the next decade and how we can and should prepare. I will also discuss some of the implications of a slowdown in the growth of computing power. Logic tells us that exponential growth cannot continue forever, and scientists foresee a clear endpoint to the growth of computing power using current technology. When this happens we will reach the end of this Industrial Revolution and perhaps have a breathing space before the onset of the next one.